There are several equivalent ways to introduce determinants, none of which is easily motivated. We prefer to define determinants through the properties they satisfy rather than by formula. These properties actually enable us to compute determinants of $n \times n$ matrices where $n > 3$, which further justifies the approach. Later on, we will give an inductive formula (??) for computing the determinant.

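A determinant function of a square $n \times n$ matrix $A$ is a real number $D(A)$ that satisfies three properties:

- (a) If $A = (a_{ij})$ is lower triangular, then $D(A)$ is the product of the diagonal entries; that is, $D(A) = a_{11} \cdots a_{nn}$.

- (b) $D(A^t) = D(A)$.

- (c) Let $B$ be an $n \times n$ matrix. Then $D(AB) = D(A)D(B)$.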

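We will show that it is possible to compute the determinant of any $n \times n$ matrix using Definition ??. Here we present a few examples. Let $A$ be an $n \times n$ matrix and let $D$ be a determinant function.

- (a) Let $c \in \mathbb{R}$ be a scalar. Then $D(cA) = c^n D(A)$.

- (b) If all of the entries in either a row or a column of $A$ are zero, then $D(A) = 0$.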

- Proof

- (a) Note that Definition ??(a) implies that $D(cI_n) = c^n$. It follows from (??) that
$$D(cA) = D(cI_n A) = D(cI_n)D(A) = c^n D(A).$$

(b) Definition ??(b) implies that it suffices to prove this assertion when one row of $A$ is zero. Suppose that the $i^{th}$ row of $A$ is zero. Let $J$ be an $n \times n$ diagonal matrix with a $1$ in every diagonal entry except the $i^{th}$ diagonal entry, which is $0$. A matrix calculation shows that $JA = A$. It follows from Definition ??(a) that $D(J) = 0$ and from (??) that $D(A) = D(J)D(A) = 0$.
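As a quick sanity check of part (a), here is a small worked instance. The matrix $A$ and the scalar $3$ are chosen purely for illustration; both $A$ and $3A$ are lower triangular, so only property (a) of the definition is needed to evaluate $D$.

```latex
A = \begin{pmatrix} 1 & 0 \\ 3 & 2 \end{pmatrix}, \qquad D(A) = 1 \cdot 2 = 2,
\qquad
3A = \begin{pmatrix} 3 & 0 \\ 9 & 6 \end{pmatrix}, \qquad D(3A) = 3 \cdot 6 = 18 = 3^2 \, D(A).
```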

Determinants of $2 \times 2$ Matrices

Before discussing how to compute determinants, we discuss the special case of $2 \times 2$ matrices. Recall from (??) of Section ?? that when
$$A = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$$
we defined
$$\det(A) = ad - bc.$$

We check that (??) satisfies the three properties in Definition ??. Observe that when $A$ is lower triangular, then $b = 0$ and $\det(A) = ad$. So (a) is satisfied. It is straightforward to verify (b). We already verified (c) in Chapter ??, Proposition ??.

It is less obvious perhaps, but true nonetheless, that the three properties of a determinant function $D$ actually force the determinant of $2 \times 2$ matrices to be given by formula (??). We begin by showing that Definition ?? implies that
$$D\begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix} = -1.$$

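We verify this by observing that
$$\begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix} = \begin{pmatrix} 1 & -1 \\ 0 & 1 \end{pmatrix}\begin{pmatrix} 1 & 0 \\ 1 & 1 \end{pmatrix}\begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}\begin{pmatrix} 1 & 1 \\ 0 & 1 \end{pmatrix}.$$
Hence properties (c), (a) and (b) imply that
$$D\begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix} = 1 \cdot 1 \cdot (-1) \cdot 1 = -1.$$
It is helpful to interpret the matrices in (??) as elementary row operations. Then (??) states that swapping two rows in a $2 \times 2$ matrix is the same as performing the following row operations in order:

- add the second row to the first row;

- multiply the second row by $-1$;

- add the first row to the second row; and

- subtract the second row from the first row.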

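Suppose that $d \neq 0$. Then
$$A = \begin{pmatrix} a & b \\ c & d \end{pmatrix} = \begin{pmatrix} 1 & \frac{b}{d} \\ 0 & 1 \end{pmatrix}\begin{pmatrix} \frac{ad - bc}{d} & 0 \\ c & d \end{pmatrix}.$$
It follows from properties (c), (b) and (a) that any determinant function $D$ satisfies
$$D(A) = \frac{ad - bc}{d} \, d = ad - bc = \det(A),$$
as claimed.

Now suppose that $d = 0$ and note that
$$A = \begin{pmatrix} a & b \\ c & 0 \end{pmatrix} = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}\begin{pmatrix} c & 0 \\ a & b \end{pmatrix}.$$
Using (??) we see that
$$D(A) = -D\begin{pmatrix} c & 0 \\ a & b \end{pmatrix} = -bc = \det(A),$$
as desired.

We have verified that the only possible determinant function for $2 \times 2$ matrices is the determinant function defined by (??).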
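The claim that a row swap can be built from three row additions and one sign change can be checked mechanically. The following is a minimal sketch in plain Python, assuming the four operations are "add row 2 to row 1", "negate row 2", "add row 1 to row 2", "subtract row 2 from row 1"; the helper names `matmul` and `swap_via_row_ops` are hypothetical and not part of the text.

```python
def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

# Elementary matrices, named after the row operation each one performs.
ADD_R2_TO_R1 = [[1, 1], [0, 1]]
NEGATE_R2 = [[1, 0], [0, -1]]
ADD_R1_TO_R2 = [[1, 0], [1, 1]]
SUB_R2_FROM_R1 = [[1, -1], [0, 1]]

def swap_via_row_ops():
    """Compose the four operations; the first one applied is the rightmost factor."""
    product = ADD_R2_TO_R1
    for R in (NEGATE_R2, ADD_R1_TO_R2, SUB_R2_FROM_R1):
        product = matmul(R, product)
    return product

P = swap_via_row_ops()
print(P)                             # [[0, 1], [1, 0]]
print(matmul(P, [[1, 2], [3, 4]]))   # [[3, 4], [1, 2]]
```

Each factor is triangular, so its determinant is the product of its diagonal entries, and the product $1 \cdot (-1) \cdot 1 \cdot 1 = -1$ agrees with the value derived above.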

Row Operations are Invertible Matrices

- Proof

- First consider multiplying the $j^{th}$ row of $A$ by the nonzero constant $c$. Let $R$ be the diagonal matrix whose $j^{th}$ entry on the diagonal is $c$ and whose other diagonal entries are $1$. Then the matrix $RA$ is just the matrix obtained from $A$ by multiplying the $j^{th}$ row of $A$ by $c$. Note that $R$ is invertible when $c \neq 0$ and that $R^{-1}$ is the diagonal matrix whose $j^{th}$ entry is $\frac{1}{c}$ and whose other diagonal entries are $1$. For example,
$$R = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 2 \end{pmatrix}$$
multiplies the third row by $2$.

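Next we show that the elementary row operation that swaps two rows may also be thought of as matrix multiplication. Let $R = (r_{kl})$ be the matrix that deviates from the identity matrix $I$ in the four entries:
$$r_{ii} = 0, \qquad r_{jj} = 0, \qquad r_{ij} = 1, \qquad r_{ji} = 1.$$

A calculation shows that $RA$ is the matrix obtained from $A$ by swapping the $i^{th}$ and $j^{th}$ rows. For example,
$$R = \begin{pmatrix} 0 & 0 & 1 \\ 0 & 1 & 0 \\ 1 & 0 & 0 \end{pmatrix}$$
swaps the first and third rows. Another calculation shows that $R^2 = I$ and hence that $R$ is invertible since $R^{-1} = R$.

Finally, we claim that adding $c$ times the $i^{th}$ row of $A$ to the $j^{th}$ row of $A$ can be viewed as matrix multiplication. Let $E_{jk}$ be the matrix all of whose entries are $0$ except for the entry in the $j^{th}$ row and $k^{th}$ column, which is $1$. Then $R = I + cE_{ji}$ has the property that $RA$ is the matrix obtained by adding $c$ times the $i^{th}$ row of $A$ to the $j^{th}$ row. We can verify by multiplication that $R$ is invertible and that $R^{-1} = I - cE_{ji}$. More precisely,
$$(I + cE_{ji})(I - cE_{ji}) = I + cE_{ji} - cE_{ji} - c^2E_{ji}^2 = I,$$
since $E_{ji}^2 = 0$ for $i \neq j$. For example,
$$I + 5E_{12} = \begin{pmatrix} 1 & 5 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix}$$
adds $5$ times the second row to the first row.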
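The three constructions in this proof can be written down directly. The sketch below is illustrative only: the function names (`scale_matrix`, `swap_matrix`, `add_matrix`) are hypothetical, rows are indexed from 0 as is usual in Python, and exact rational arithmetic from the standard library is used so that inverses can be compared without rounding.

```python
from fractions import Fraction

def identity(m):
    """The m x m identity matrix with Fraction entries."""
    return [[Fraction(int(i == j)) for j in range(m)] for i in range(m)]

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def scale_matrix(m, j, c):
    """Diagonal matrix that multiplies row j by c."""
    R = identity(m)
    R[j][j] = Fraction(c)
    return R

def swap_matrix(m, i, j):
    """Identity matrix with entries (i,i), (j,j) set to 0 and (i,j), (j,i) set to 1."""
    R = identity(m)
    R[i][i] = R[j][j] = Fraction(0)
    R[i][j] = R[j][i] = Fraction(1)
    return R

def add_matrix(m, i, j, c):
    """I + c*E_{ji}: adds c times row i to row j (requires i != j)."""
    R = identity(m)
    R[j][i] += Fraction(c)
    return R

A = [[1, 2], [3, 4], [5, 6]]
print(matmul(scale_matrix(3, 2, 2), A) == [[1, 2], [3, 4], [10, 12]])   # True
print(matmul(swap_matrix(3, 0, 2), A) == [[5, 6], [3, 4], [1, 2]])      # True
print(matmul(add_matrix(3, 1, 0, 5), A) == [[16, 22], [3, 4], [5, 6]])  # True
```

The inverses described in the proof can be checked the same way, for instance `matmul(add_matrix(3, 1, 0, 5), add_matrix(3, 1, 0, -5)) == identity(3)`.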

Determinants of Elementary Row Matrices

- (a) The determinant of a swap matrix is $-1$.

- (b) The determinant of the matrix that adds a multiple of one row to another is $1$.

- (c) The determinant of the matrix that multiplies one row by $c$ is $c$.

- Proof

- The matrix that swaps the $i^{th}$ row with the $j^{th}$ row is the matrix whose nonzero elements are $a_{kk} = 1$ where $k \neq i, j$ and $a_{ij} = 1 = a_{ji}$. Using a similar argument as in (??) we see that the determinants of these matrices are equal to $-1$.

The matrix that adds a multiple of one row to another is triangular (either upper or lower) and has $1$'s on the diagonal. Thus property (a) in Definition ??, together with property (b) in the upper triangular case, implies that the determinants of these matrices are equal to $1$.

Finally, the matrix that multiplies the $i^{th}$ row by $c \neq 0$ is a diagonal matrix all of whose diagonal entries are $1$ except for $a_{ii} = c$. Again property (a) implies that the determinant of this matrix is $c$.

Computation of Determinants

We now show how to compute the determinant of any $n \times n$ matrix $A$ using elementary row operations and Definition ??. It follows from Proposition ?? that every elementary row operation on $A$ may be performed by premultiplying $A$ by an elementary row matrix.

For each matrix $A$ there is a unique reduced echelon form matrix $E$ and a sequence of elementary row matrices $R_1, \ldots, R_s$ such that
$$E = R_s \cdots R_1 A.$$

It follows from Definition ??(c) that we can compute the determinant of $A$ once we know the determinants of reduced echelon form matrices and the determinants of elementary row matrices. In particular, for any determinant function $D$,
$$D(A) = \frac{D(E)}{D(R_1) \cdots D(R_s)}.$$

It is easy to compute the determinant of any matrix in reduced echelon form using Definition ??(a) and (b), since all reduced echelon form matrices are upper triangular. Lemma ?? tells us how to compute the determinants of elementary row matrices. This discussion proves:

- If a determinant function exists for $n \times n$ matrices, then it is unique. We call the unique determinant function $\det$.

We still need to show that determinant functions exist when $n > 2$. More precisely, we know that the reduced echelon form matrix $E$ is uniquely defined from $A$ (Chapter ??, Theorem ??), but there is more than one way to perform elementary row operations on $A$ to get to $E$. Thus, we can write $A$ in the form (??) in many different ways, and these different decompositions might lead to different values for $\det(A)$. (They don't.)
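The bookkeeping described above (track the determinant of each elementary row matrix while reducing) can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the text's own algorithm: the function name `det_by_row_reduction` is hypothetical, the reduction stops at an upper triangular matrix with $1$'s on the diagonal rather than continuing to reduced echelon form, and the $4 \times 4$ test matrix is just a sample input.

```python
from fractions import Fraction

def det_by_row_reduction(A):
    """Determinant of a square matrix via elementary row operations.

    Swaps flip the sign, scaling a row by 1/c contributes a factor c,
    and adding a multiple of one row to another changes nothing.
    """
    M = [[Fraction(x) for x in row] for row in A]
    n = len(M)
    det = Fraction(1)
    for col in range(n):
        # Find a row at or below the diagonal with a nonzero entry in this column.
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)  # no pivot in this column: the matrix is singular
        if pivot != col:
            M[col], M[pivot] = M[pivot], M[col]
            det = -det  # a swap matrix has determinant -1
        c = M[col][col]
        det *= c  # dividing the row by c must be compensated by a factor c
        M[col] = [x / c for x in M[col]]
        for r in range(col + 1, n):
            factor = M[r][col]
            M[r] = [x - factor * y for x, y in zip(M[r], M[col])]
    # M is now upper triangular with 1's on the diagonal, so its determinant is 1.
    return det

sample = [[0, 2, 10, -2],
          [1, 2, 4, 0],
          [1, 6, 1, -2],
          [2, 1, 1, 0]]
print(det_by_row_reduction(sample))            # -106
print(det_by_row_reduction([[1, 2], [2, 4]]))  # 0
```

Exact fractions are used so that the singular case is detected by a true zero pivot rather than by a floating point tolerance.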

An Example of Determinants by Row Reduction

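As a practical matter we row reduce a square matrix $A$ by premultiplying $A$ by an elementary row matrix $R_j$. Thus
$$\det(A) = \frac{1}{\det(R_j)} \det(R_j A).$$

We use this approach to compute the determinant of the $4 \times 4$ matrix
$$A = \begin{pmatrix} 0 & 2 & 10 & -2 \\ 1 & 2 & 4 & 0 \\ 1 & 6 & 1 & -2 \\ 2 & 1 & 1 & 0 \end{pmatrix}.$$
The idea is to use (??) to keep track of the determinant while row reducing $A$ to upper triangular form. For instance, swapping rows changes the sign of the determinant; so
$$\det(A) = -\det\begin{pmatrix} 1 & 2 & 4 & 0 \\ 0 & 2 & 10 & -2 \\ 1 & 6 & 1 & -2 \\ 2 & 1 & 1 & 0 \end{pmatrix}.$$
Adding multiples of one row to another leaves the determinant unchanged; so
$$\det(A) = -\det\begin{pmatrix} 1 & 2 & 4 & 0 \\ 0 & 2 & 10 & -2 \\ 0 & 4 & -3 & -2 \\ 0 & -3 & -7 & 0 \end{pmatrix}.$$
Multiplying a row by a scalar $c$ corresponds to an elementary row matrix whose determinant is $c$. To make sure that we do not change the value of $\det(A)$, we have to divide the determinant by $c$ as we multiply a row of $A$ by $c$. So as we divide the second row of the matrix by $2$, we multiply the whole result by $2$, obtaining
$$\det(A) = -2\det\begin{pmatrix} 1 & 2 & 4 & 0 \\ 0 & 1 & 5 & -1 \\ 0 & 4 & -3 & -2 \\ 0 & -3 & -7 & 0 \end{pmatrix}.$$
We continue row reduction by zeroing out the last two entries in the second column, obtaining
$$\det(A) = -2\det\begin{pmatrix} 1 & 2 & 4 & 0 \\ 0 & 1 & 5 & -1 \\ 0 & 0 & -23 & 2 \\ 0 & 0 & 8 & -3 \end{pmatrix} = 46\det\begin{pmatrix} 1 & 2 & 4 & 0 \\ 0 & 1 & 5 & -1 \\ 0 & 0 & 1 & -\frac{2}{23} \\ 0 & 0 & 8 & -3 \end{pmatrix} = 46\det\begin{pmatrix} 1 & 2 & 4 & 0 \\ 0 & 1 & 5 & -1 \\ 0 & 0 & 1 & -\frac{2}{23} \\ 0 & 0 & 0 & -\frac{53}{23} \end{pmatrix} = -106.$$

Determinants and Inverses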

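We end this subsection with an important observation about the determinant function. This observation generalizes Corollary ?? of Chapter ?? to dimension $n$.

- An $n \times n$ matrix $A$ is invertible if and only if $\det(A) \neq 0$. Moreover, if $A^{-1}$ exists, then
$$\det(A^{-1}) = \frac{1}{\det(A)}.$$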

- Proof

- If $A$ is invertible, then
$$\det(A)\det(A^{-1}) = \det(AA^{-1}) = \det(I_n) = 1.$$
Thus $\det(A) \neq 0$ and (??) is valid. In particular, the determinants of elementary row matrices are nonzero, since they are all invertible. (This point was proved by direct calculation in Lemma ??.)

If $A$ is singular, then $A$ is row equivalent to a non-identity reduced echelon form matrix $E$ whose determinant is zero (since $E$ is upper triangular and its last diagonal entry is zero). So it follows from (??) that
$$0 = \det(E) = \det(R_1) \cdots \det(R_s)\det(A).$$
Since $\det(R_j) \neq 0$, it follows that $\det(A) = 0$.
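A small worked instance may help fix the relation between the determinant of a matrix and that of its inverse. The matrix below is chosen only for illustration; it and its inverse are lower triangular, so both determinants are products of diagonal entries.

```latex
A = \begin{pmatrix} 2 & 0 \\ 1 & 4 \end{pmatrix}, \qquad \det(A) = 2 \cdot 4 = 8,
\qquad
A^{-1} = \begin{pmatrix} \frac{1}{2} & 0 \\ -\frac{1}{8} & \frac{1}{4} \end{pmatrix}, \qquad
\det(A^{-1}) = \frac{1}{2} \cdot \frac{1}{4} = \frac{1}{8} = \frac{1}{\det(A)}.
```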

An Inductive Formula for Determinants

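In this subsection we present an inductive formula for the determinant; that is, we assume that the determinant is known for square $(n-1) \times (n-1)$ matrices and use this formula to define the determinant for $n \times n$ matrices. This inductive formula is called expansion by cofactors.

Let $A = (a_{ij})$ be an $n \times n$ matrix. Let $A_{ij}$ be the $(n-1) \times (n-1)$ matrix formed from $A$ by deleting the $i^{th}$ row and the $j^{th}$ column. The matrices $A_{ij}$ are called cofactor matrices of $A$.

Inductively we define the determinant of an $n \times n$ matrix $A$ by:
$$\det(A) = \sum_{j=1}^{n} (-1)^{1+j} a_{1j} \det(A_{1j}) = a_{11}\det(A_{11}) - a_{12}\det(A_{12}) + \cdots + (-1)^{n+1} a_{1n}\det(A_{1n}).$$

In Appendix ?? we show that the determinant function defined by (??) satisfies all properties of a determinant function. Formula (??) is also called expansion by cofactors along the first row, since the $a_{1j}$ are taken from the first row of $A$. Since $\det(A) = \det(A^t)$, it follows that if (??) is valid as an inductive definition of determinant, then expansion by cofactors along the first column is also valid. That is,
$$\det(A) = a_{11}\det(A_{11}) - a_{21}\det(A_{21}) + \cdots + (-1)^{n+1} a_{n1}\det(A_{n1}).$$

We now explore some of the consequences of this definition, beginning with determinants of small matrices. For example, Definition ??(a) implies that the determinant of a $1 \times 1$ matrix is just
$$\det(a) = a.$$
Therefore, using (??), the determinant of a $2 \times 2$ matrix is:
$$\det\begin{pmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{pmatrix} = a_{11}\det(a_{22}) - a_{12}\det(a_{21}) = a_{11}a_{22} - a_{12}a_{21},$$
which is just the formula for determinants of $2 \times 2$ matrices given in (??).

Similarly, we can now find a formula for the determinant of $3 \times 3$ matrices as follows.
$$\begin{aligned}
\det\begin{pmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{pmatrix}
&= a_{11}\det\begin{pmatrix} a_{22} & a_{23} \\ a_{32} & a_{33} \end{pmatrix} - a_{12}\det\begin{pmatrix} a_{21} & a_{23} \\ a_{31} & a_{33} \end{pmatrix} + a_{13}\det\begin{pmatrix} a_{21} & a_{22} \\ a_{31} & a_{32} \end{pmatrix} \\
&= a_{11}a_{22}a_{33} + a_{12}a_{23}a_{31} + a_{13}a_{21}a_{32} - a_{11}a_{23}a_{32} - a_{12}a_{21}a_{33} - a_{13}a_{22}a_{31}.
\end{aligned}$$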

As an example, compute using formula (??) as

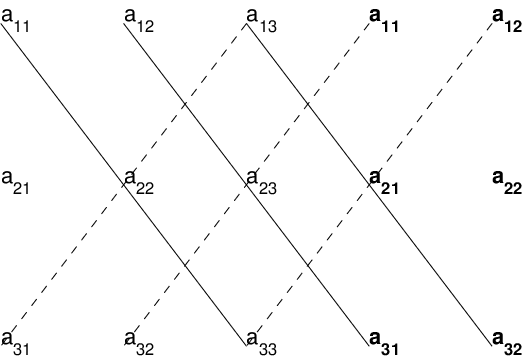

There is a visual mnemonic for remembering how to compute the six terms in formula (??) for the determinant of $3 \times 3$ matrices. Write the matrix as a $3 \times 5$ array by repeating the first two columns, as shown in bold face in Figure ??:
$$\begin{array}{ccccc}
a_{11} & a_{12} & a_{13} & \mathbf{a_{11}} & \mathbf{a_{12}} \\
a_{21} & a_{22} & a_{23} & \mathbf{a_{21}} & \mathbf{a_{22}} \\
a_{31} & a_{32} & a_{33} & \mathbf{a_{31}} & \mathbf{a_{32}}
\end{array}$$

Then add the products of terms connected by solid lines sloping down and to the right and subtract the products of terms connected by dashed lines sloping up and to the right. Warning: this nice crisscross algorithm for computing determinants of $3 \times 3$ matrices does not generalize to $n \times n$ matrices.

When computing determinants of $n \times n$ matrices when $n > 3$, it is usually more efficient to compute the determinant using row reduction rather than by using formula (??). In the appendix to this chapter, Section ??, we verify that formula (??) actually satisfies the three properties of a determinant, thus completing the proof of Theorem ??.
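Expansion by cofactors along the first row translates almost word for word into a recursive function. The sketch below is illustrative: the names `minor`, `det_cofactor` and `det_3x3_formula` are hypothetical, indices start at 0, and the sample matrix is arbitrary. It also compares the recursion with the explicit six-term formula for $3 \times 3$ matrices.

```python
def minor(A, i, j):
    """Cofactor matrix: A with row i and column j deleted (0-indexed)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(A) if k != i]

def det_cofactor(A):
    """Determinant by expansion along the first row."""
    n = len(A)
    if n == 1:
        return A[0][0]
    # Signs alternate +, -, +, ... across the first row.
    return sum((-1) ** j * A[0][j] * det_cofactor(minor(A, 0, j))
               for j in range(n))

def det_3x3_formula(A):
    """The explicit six-term formula for a 3 x 3 matrix."""
    (a11, a12, a13), (a21, a22, a23), (a31, a32, a33) = A
    return (a11 * a22 * a33 + a12 * a23 * a31 + a13 * a21 * a32
            - a11 * a23 * a32 - a12 * a21 * a33 - a13 * a22 * a31)

sample = [[2, 1, 4], [1, -1, 3], [5, 6, -2]]
print(det_cofactor(sample))      # 29
print(det_3x3_formula(sample))   # 29
```

The recursion performs on the order of $n!$ multiplications, which is one way to see why row reduction is preferred for larger matrices.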

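An interesting and useful formula for reducing the effort in computing determinants is given by the following lemma.

- Let $A$ be an $n \times n$ matrix of the form
$$A = \begin{pmatrix} B & 0 \\ C & D \end{pmatrix},$$
where $B$ is a $k \times k$ matrix and $D$ is an $(n-k) \times (n-k)$ matrix. Then $\det(A) = \det(B)\det(D)$.

- Proof

- We prove this result using (??) coupled with induction. Assume that this lemma is valid for all $(n-1) \times (n-1)$ matrices of the appropriate form. Now use (??) to compute
$$\det(A) = a_{11}\det(A_{11}) - a_{12}\det(A_{12}) + \cdots \pm a_{1n}\det(A_{1n}) = b_{11}\det(A_{11}) - b_{12}\det(A_{12}) + \cdots \pm b_{1k}\det(A_{1k}).$$

Note that the cofactor matrices $A_{1j}$ are obtained from $A$ by deleting the first row and the $j^{th}$ column. These matrices all have the form
$$A_{1j} = \begin{pmatrix} B_{1j} & 0 \\ C_j & D \end{pmatrix},$$
where $C_j$ is obtained from $C$ by deleting the $j^{th}$ column. By induction on $k$,
$$\det(A_{1j}) = \det(B_{1j})\det(D).$$
It follows that
$$\det(A) = \left(b_{11}\det(B_{11}) - b_{12}\det(B_{12}) + \cdots \pm b_{1k}\det(B_{1k})\right)\det(D) = \det(B)\det(D),$$
as desired.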
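To see the block formula in action, here is a small worked instance. It assumes the lemma concerns matrices with a zero block in the upper right corner, so that the determinant is the product of the determinants of the two diagonal blocks; the entries are chosen only for illustration. The last line checks the result independently by expanding along the first row.

```latex
A = \begin{pmatrix} 1 & 2 & 0 & 0 \\ 3 & 4 & 0 & 0 \\ 5 & 6 & 7 & 8 \\ 9 & 1 & 2 & 3 \end{pmatrix},
\qquad
B = \begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix}, \quad
D = \begin{pmatrix} 7 & 8 \\ 2 & 3 \end{pmatrix},
\qquad
\det(B)\det(D) = (4 - 6)(21 - 16) = -10.

\det(A) = 1 \cdot \det\begin{pmatrix} 4 & 0 & 0 \\ 6 & 7 & 8 \\ 1 & 2 & 3 \end{pmatrix}
        - 2 \cdot \det\begin{pmatrix} 3 & 0 & 0 \\ 5 & 7 & 8 \\ 9 & 2 & 3 \end{pmatrix}
        = 1 \cdot 4 \cdot 5 - 2 \cdot 3 \cdot 5 = -10.
```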

Determinants in MATLAB

The determinant function has been preprogrammed in MATLAB and is quite easy to use. For example, typing e8_1_11 will load the matrix

Prove Cramer’s rule. Hint: Let be the column of so that . Show that Using this product, compute the determinant of and verify (??).