We’ve seen how we can optimize a function subject to a constraint using substitution, and we’ve seen that it can be very difficult to correctly handle the constraints! Fortunately, there is a tool that we can use to simplify this process. This tool is called “Lagrange multipliers.”

Gradients

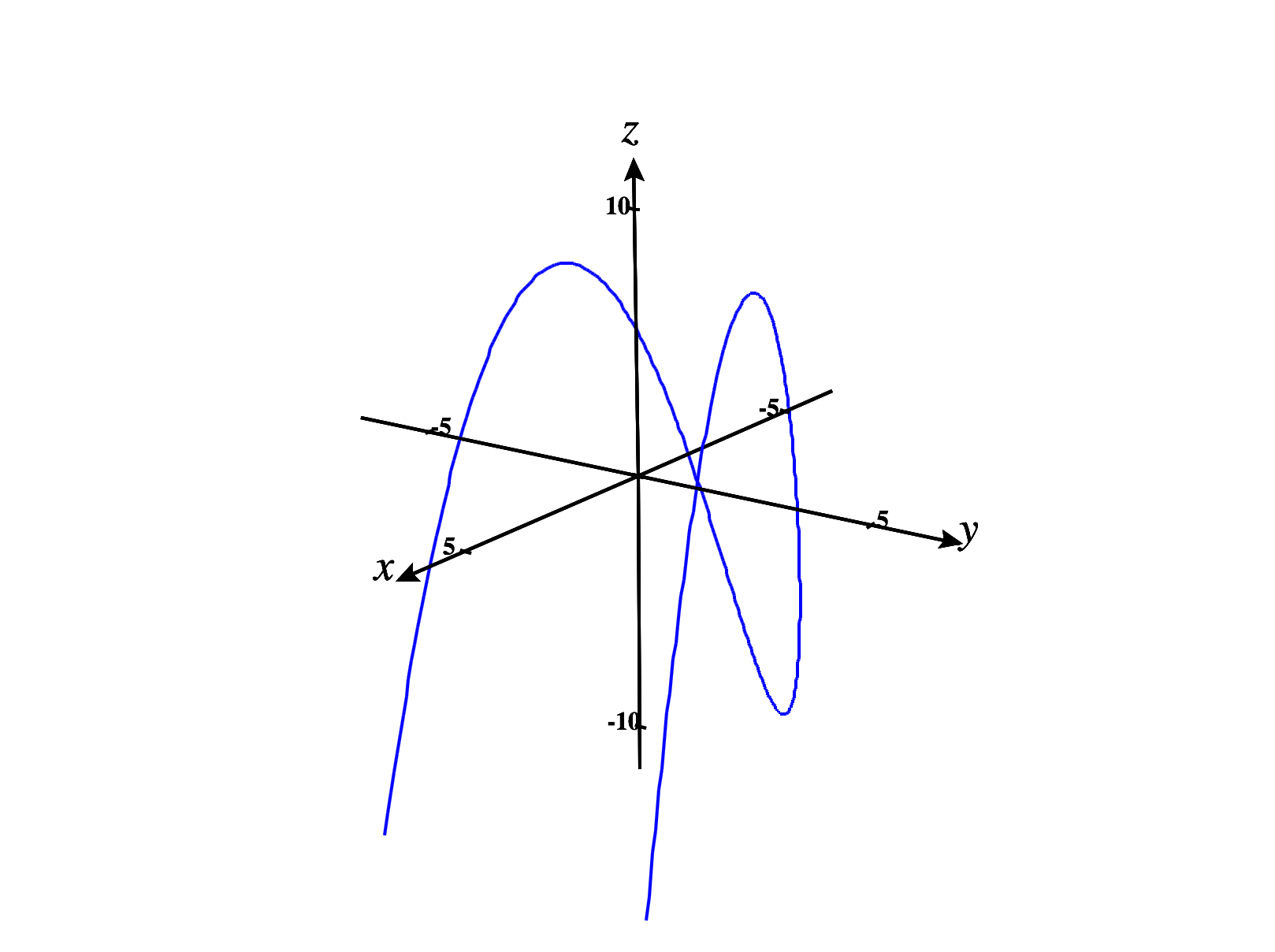

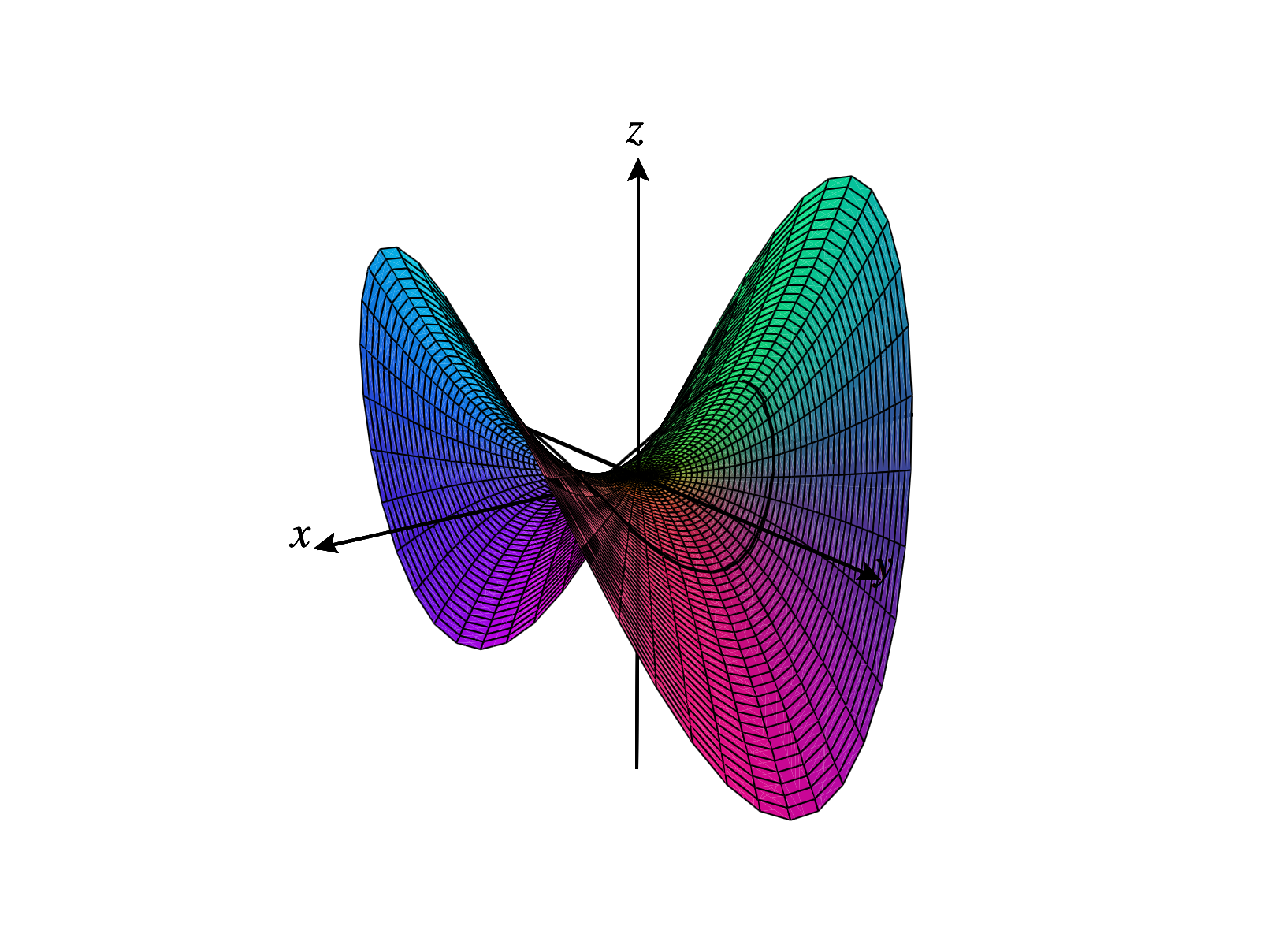

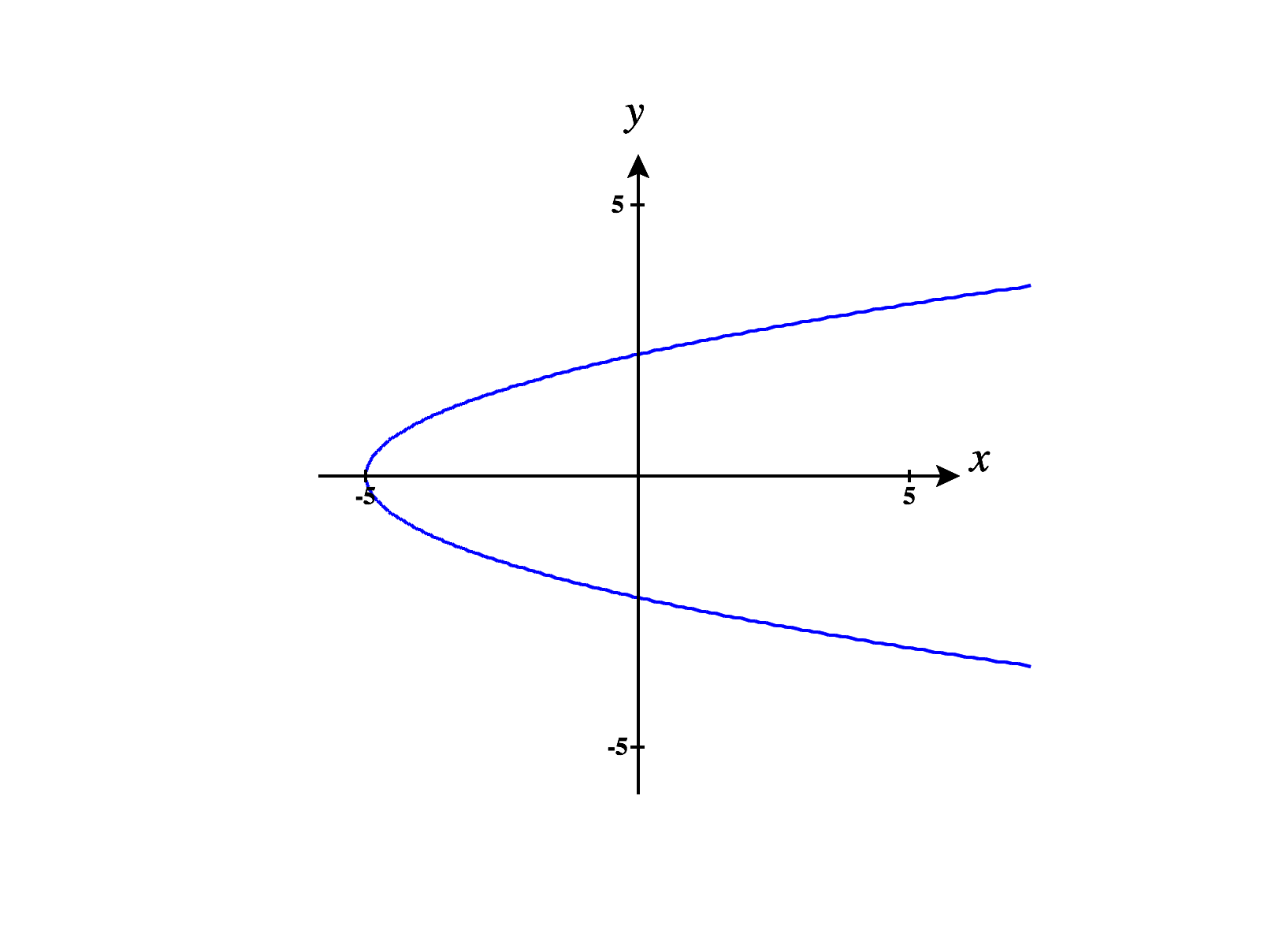

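Suppose we wish to optimize a function $f(\vec{x})$ subject to some constraint $g(\vec{x}) = c$, where $c$ is a constant, and $g$ is a function $g : \mathbb{R}^n \to \mathbb{R}$. For now, we’ll focus on the case $n = 2$, so we have a function $f(x, y)$ and a constraint $g(x, y) = c$. The graph of $f$ will be a surface in $\mathbb{R}^3$, and the graph of $g(x, y) = c$ is a curve in $\mathbb{R}^2$.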

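Now, suppose that the maximum value of $f$ subject to the constraint $g(x, y) = c$ occurs at some point $(a, b)$. Suppose we parametrize the curve $g(x, y) = c$ as $\vec{x}(t)$, with $\vec{x}(t_0) = (a, b)$. We can view $g(x, y) = c$ as a level curve of the function $g$, and then the gradient of $g$ will always be perpendicular to this curve.

More precisely, for any point $\vec{x}(t)$ on the curve $g(x, y) = c$, the gradient $\nabla g(\vec{x}(t))$ is perpendicular to the tangent vector $\vec{x}'(t)$. That is, $\nabla g(\vec{x}(t)) \cdot \vec{x}'(t) = 0$ for all $t$.

Next, let’s turn our attention back to $f$. If $f$ has an absolute maximum subject to $g(x, y) = c$ at $(a, b)$, then $f(\vec{x}(t))$ has an absolute maximum at $t_0$. This means that $f(\vec{x}(t))$ has a critical point at $t_0$, so $\frac{d}{dt} f(\vec{x}(t)) \big|_{t = t_0} = 0$. Using the chain rule, we can rewrite this as

$$\nabla f(\vec{x}(t_0)) \cdot \vec{x}'(t_0) = 0.$$

So, both $\nabla f(a, b)$ and $\nabla g(a, b)$ are perpendicular to $\vec{x}'(t_0)$. Since we are considering vectors in $\mathbb{R}^2$, this means that $\nabla f(a, b)$ and $\nabla g(a, b)$ are parallel (provided $\vec{x}'(t_0)$ and $\nabla g(a, b)$ are nonzero), so we can write $\nabla f(a, b) = \lambda \nabla g(a, b)$ for some constant $\lambda$.

So, we can find candidate points for the absolute maximum (and similarly, the absolute minimum) of $f$ subject to the constraint $g(x, y) = c$ by finding points where

$$\nabla f(x, y) = \lambda \nabla g(x, y) \quad \text{and} \quad g(x, y) = c.$$

This observation generalizes to $\mathbb{R}^n$.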
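The parallel-gradient condition can be checked numerically. The sketch below uses a hypothetical problem that does not appear in this section: $f(x, y) = x + y$ on the unit circle $x^2 + y^2 = 1$. The function names (`curve`, `grad_f`, `grad_g`) and the sampling approach are assumptions made for illustration only.

```python
import math

# Hypothetical example (not from the text):
# f(x, y) = x + y, constraint g(x, y) = x^2 + y^2 = 1.
def f(x, y):
    return x + y

def grad_f(x, y):
    return (1.0, 1.0)

def grad_g(x, y):
    return (2.0 * x, 2.0 * y)

def curve(t):
    # Parametrization x(t) of the constraint curve (the unit circle).
    return (math.cos(t), math.sin(t))

def velocity(t):
    # Tangent vector x'(t) of the parametrization.
    return (-math.sin(t), math.cos(t))

def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]

def cross(u, v):
    # 2D cross product; it is zero exactly when u and v are parallel.
    return u[0] * v[1] - u[1] * v[0]

# Locate the constrained maximum by sampling the parametrization.
N = 100000
samples = [2 * math.pi * k / N for k in range(N)]
t0 = max(samples, key=lambda t: f(*curve(t)))
a, b = curve(t0)

# Both gradients should be (nearly) perpendicular to x'(t0) ...
grad_f_dot_tangent = dot(grad_f(a, b), velocity(t0))
grad_g_dot_tangent = dot(grad_g(a, b), velocity(t0))

# ... and therefore (nearly) parallel to each other.
parallel_defect = cross(grad_f(a, b), grad_g(a, b))

# The multiplier lambda with grad f = lambda * grad g at (a, b).
lam = grad_f(a, b)[0] / grad_g(a, b)[0]

print(a, b, grad_f_dot_tangent, grad_g_dot_tangent, parallel_defect, lam)
```

In this sketch the sampled maximum lands near $(1/\sqrt{2}, 1/\sqrt{2})$, both dot products are close to zero, and the multiplier is close to $1/\sqrt{2}$.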

We can leverage this theorem into a method for finding absolute extrema of a function subject to a constraint, which we call the method of Lagrange multipliers.

To find the absolute extrema of a function $f(\vec{x})$ subject to a constraint $g(\vec{x}) = c$:

- (a) Compute the gradients $\nabla f$ and $\nabla g$.

- (b) Solve the system of equations $\nabla f(\vec{x}) = \lambda \nabla g(\vec{x})$, $g(\vec{x}) = c$ for $\vec{x}$ and $\lambda$.

- (c) The solutions to the system of equations in (b) are the critical points of $f$ subject to $g(\vec{x}) = c$. Classify these critical points in order to determine the absolute extrema.

If the constraint set $\{\vec{x} : g(\vec{x}) = c\}$ is compact and $f$ is continuous, we can determine the absolute extrema by comparing the values of $f$ at the critical points. In this case, absolute extrema are guaranteed to exist by the Extreme Value Theorem.
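As a brief illustration of steps (a) through (c), here is a sketch for a hypothetical problem that is not one of the examples in this section: optimize $f(x, y) = x + y$ subject to $x^2 + y^2 = 1$, so that $g(x, y) = x^2 + y^2$ and $c = 1$. The constraint curve is a circle, which is compact, so comparing values at the critical points is enough.

$$
\begin{aligned}
\nabla f(x, y) &= (1, 1), \qquad \nabla g(x, y) = (2x, 2y) \\
1 &= 2\lambda x, \qquad 1 = 2\lambda y, \qquad x^2 + y^2 = 1 \\
x &= y = \frac{1}{2\lambda} \;\implies\; \frac{1}{2\lambda^2} = 1 \;\implies\; \lambda = \pm\frac{1}{\sqrt{2}} \\
(x, y) &= \pm\left(\tfrac{1}{\sqrt{2}}, \tfrac{1}{\sqrt{2}}\right), \qquad f(x, y) = \pm\sqrt{2}
\end{aligned}
$$

Under these assumptions, the absolute maximum is $\sqrt{2}$ at $\left(\tfrac{1}{\sqrt{2}}, \tfrac{1}{\sqrt{2}}\right)$ and the absolute minimum is $-\sqrt{2}$ at $\left(-\tfrac{1}{\sqrt{2}}, -\tfrac{1}{\sqrt{2}}\right)$.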

We’ll see how this process works in a couple of examples. First, we repeat an optimization problem that was previously solved with substitution.

If we let , our constraint is .

Computing the gradients of and , we have

Setting up the system of equations we have

We can rewrite this as a system of three equations,

From the first equation, we have either or .

If , the third equation gives us . Thus, we obtain the critical points .

If , the second equation gives us . Then, the third equation gives us . This gives us the critical points .

Since we know that will have an absolute maximum and minimum subject to our constraint, we will compare the values at the critical points to determine the absolute maximum and minimum.

We see that the absolute maximum of subject to our constraint is , and this occurs at the points . The absolute minimum of subject to our constraint is , and this occurs at the points .

However, Lagrange multipliers will still be helpful for finding critical points. We can rewrite our constraint as , and take . So, we are optimizing subject to the constraint .

We begin by finding the gradients of and .

Next, we solve the system which is We can rewrite this system as the three equations From the second equation, we have either , or .

If , the third equation gives us . So, we have a critical point .

If , the first equation gives us . Then the third equation gives us . So, we have the critical points .

In this case, we can’t determine the absolute maximum and absolute minimum by plugging in these points, since we aren’t optimizing over a compact region.

However, looking at the graph of over the curve , we can see that the absolute maximum occurs at the points . Although there is a local minimum at , this is not an absolute minimum, as there is no absolute minimum.
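To see why comparing values at the critical points can mislead on a non-compact constraint, consider a hypothetical problem that is different from the example above: $f(x, y) = x^2 + y^2$ subject to $xy = 1$. Solving $2x = \lambda y$, $2y = \lambda x$, $xy = 1$ gives the critical points $(1, 1)$ and $(-1, -1)$. The sketch below, with illustrative names chosen here, evaluates $f$ at those points and at points far out along the curve.

```python
# Hypothetical example (not from the text):
# f(x, y) = x^2 + y^2 on the non-compact curve xy = 1.
def f(x, y):
    return x**2 + y**2

# Critical points from the Lagrange system 2x = lam*y, 2y = lam*x, xy = 1.
critical_points = [(1.0, 1.0), (-1.0, -1.0)]
critical_values = [f(x, y) for x, y in critical_points]
largest_critical = max(critical_values)

# Points (t, 1/t) lie on the constraint curve for any t != 0.
far_values = [f(t, 1.0 / t) for t in (10.0, 100.0, 1000.0)]

# Sample the branch with t > 0 to estimate the smallest value of f on it.
sampled_min = min(f(k / 100.0, 100.0 / k) for k in range(10, 1001))

print(critical_values)  # both critical points give the same value
print(far_values)       # values keep growing along the curve
print(sampled_min)
```

Here both critical points give the value 2, which is the absolute minimum, while $f$ grows without bound along the curve, so there is no absolute maximum. A comparison of critical values alone would not reveal this, which is why a graph or a separate argument is needed when the constraint is not compact.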